In the last post we learned that bandwidth is not the only factor that affects your network experience. The other one is latency.

As we know, data is transferred as binary signals through a medium, which can be copper, fiber, or even the air. Over long-distance fiber connections the signal travels at a speed comparable to that of light (about two thirds of its vacuum speed inside glass fiber). But even light needs time to reach some places :)

For example, typical latencies are:

- Domestic: 3 – 30 ms

- Trans-Pacific: 350 ms

- Trans-Atlantic: 120 ms
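
To get a feeling for how much of such a figure is pure physics, here is a minimal sketch that estimates the lower bound of the round-trip time from propagation delay alone. The signal speed of 200,000 km/s in fiber is an approximation, and the path lengths are hypothetical values picked for illustration, not measurements. Real latencies are higher because of routing detours, switching, and queuing.

```python
# Lower bound on round-trip time from propagation delay alone.
# Assumption: signals in glass fiber travel at roughly 2/3 of the
# vacuum speed of light, i.e. about 200,000 km/s.
SPEED_IN_FIBER_KM_PER_S = 200_000


def min_rtt_ms(path_km: float) -> float:
    """Round-trip propagation time in ms for a given one-way path length."""
    return 2 * path_km / SPEED_IN_FIBER_KM_PER_S * 1000


# Hypothetical one-way cable lengths, for illustration only.
EXAMPLE_PATHS_KM = [
    ("domestic", 500),
    ("trans-Atlantic", 6_000),
    ("trans-Pacific", 10_000),
]

for name, km in EXAMPLE_PATHS_KM:
    print(f"{name}: at least {min_rtt_ms(km):.0f} ms round trip")
```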

With data connections, the first thing such a long latency brings to mind is transfer delay. For example, if you use Skype or another video/audio application, you will notice the picture and voice lagging.

In enterprise usage it is much more complex:

Most enterprise applications rely on TCP to transfer data between server and client. TCP requires the sender to wait for the receiver to acknowledge the data it has sent before it can send the next batch. The time spent on each transfer is therefore made up of three elements:

- connection setup, which can be neglected for large transfers because it is just a single handshake between sender and receiver

- sending time, the time needed to put the data onto the Ethernet link

- waiting time, the round-trip time of the signal over the physical path

Assume you have a 10 Mbps local Ethernet connection and send data across the Atlantic. The send time for 1 KB of data is about 1K*8/10M = 0.8 ms, and the wait time is 120 ms. The Ethernet send time can therefore be ignored, and the latency is almost entirely the wait time. In this case the sender spends most of its time waiting before it can send the next packet.
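
The arithmetic can be checked with a short sketch. It models the simplified case described here, where one packet is sent per round trip, and it assumes 1 KB means 1000 bytes. The function and constant names are made up for this illustration.

```python
def send_time_ms(payload_bytes: int, link_bps: float) -> float:
    """Time in ms to put payload_bytes onto a link of link_bps bits per second."""
    return payload_bytes * 8 / link_bps * 1000


LINK_BPS = 10_000_000   # 10 Mbps local Ethernet
RTT_MS = 120            # trans-Atlantic round-trip time from the list above
PAYLOAD_BYTES = 1000    # assumption: 1 KB taken as 1000 bytes

send_ms = send_time_ms(PAYLOAD_BYTES, LINK_BPS)
# Share of each send-and-wait cycle that the sender spends idle.
idle_share = RTT_MS / (send_ms + RTT_MS)

print(f"send time: {send_ms:.1f} ms, wait time: {RTT_MS} ms")
print(f"sender is idle {idle_share:.1%} of the time")
```

With these numbers the sender is waiting more than 99% of the time, which is why the send time can be dropped from the estimate.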

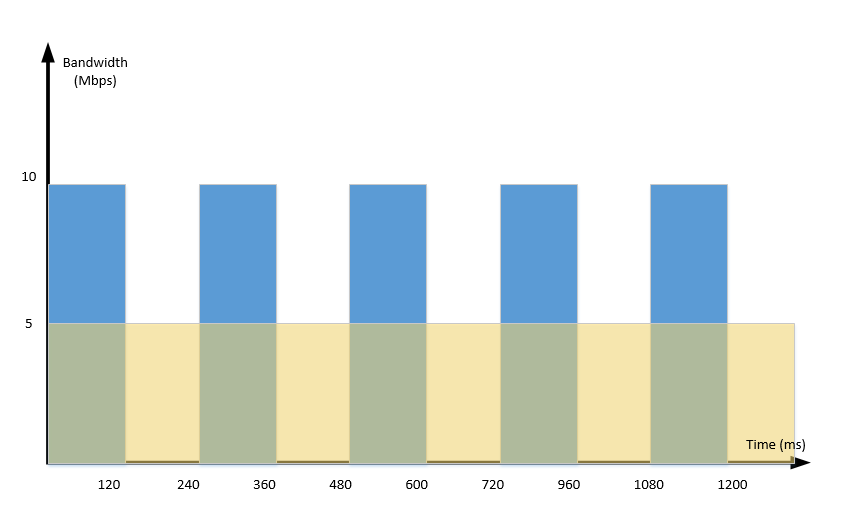

The picture above depicts a simple scenario: if you can send data for 120 ms and then have to wait for 120 ms, your effective bandwidth is only about half of what is actually available.

Now you can see the importance of latency. In the real world, the wait time also depends on the MSS and window size settings of TCP. Without the window scaling option, the maximum window size is 65535 bytes, which means a sender can have at most 65535 bytes in flight in one batch. The bandwidth it actually gets is then 65535 bytes / 120 ms, about 4.4 Mbps. No matter how much bandwidth the line offers, the sender cannot exceed this value.
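
The same ceiling can be written as a small calculation. This is a sketch that ignores protocol overhead and packet loss. The second function turns the formula around to estimate how large the window would have to be to fill a given line. Both function names are hypothetical.

```python
def max_throughput_bps(window_bytes: int, rtt_s: float) -> float:
    """Upper bound on throughput when one window is sent per round trip."""
    return window_bytes * 8 / rtt_s


def window_needed_bytes(link_bps: float, rtt_s: float) -> float:
    """Window size required to keep a link of link_bps busy for a full round trip."""
    return link_bps * rtt_s / 8


RTT_S = 0.120            # trans-Atlantic round-trip time
MAX_WINDOW_BYTES = 65535  # largest TCP window without window scaling

ceiling = max_throughput_bps(MAX_WINDOW_BYTES, RTT_S)
needed = window_needed_bytes(10_000_000, RTT_S)

print(f"throughput ceiling: {ceiling / 1e6:.2f} Mbps")
print(f"window needed to fill 10 Mbps: {needed:.0f} bytes")
```

For the 10 Mbps line from the earlier example the window would have to be about 150,000 bytes, more than twice the 65535-byte maximum, so the line cannot be filled by a single connection with these settings.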

Latency is always there. How can we overcome the limitation it imposes?

To cook well you need good ingredients. First of all you need a good-quality line, which makes an acceptable latency possible. A WAN optimization solution will then help you make full use of your bandwidth. We will come to these topics in the next posts.